To Evaluate, or to Be Evaluated? That is the Question.

By M. Andrew Young

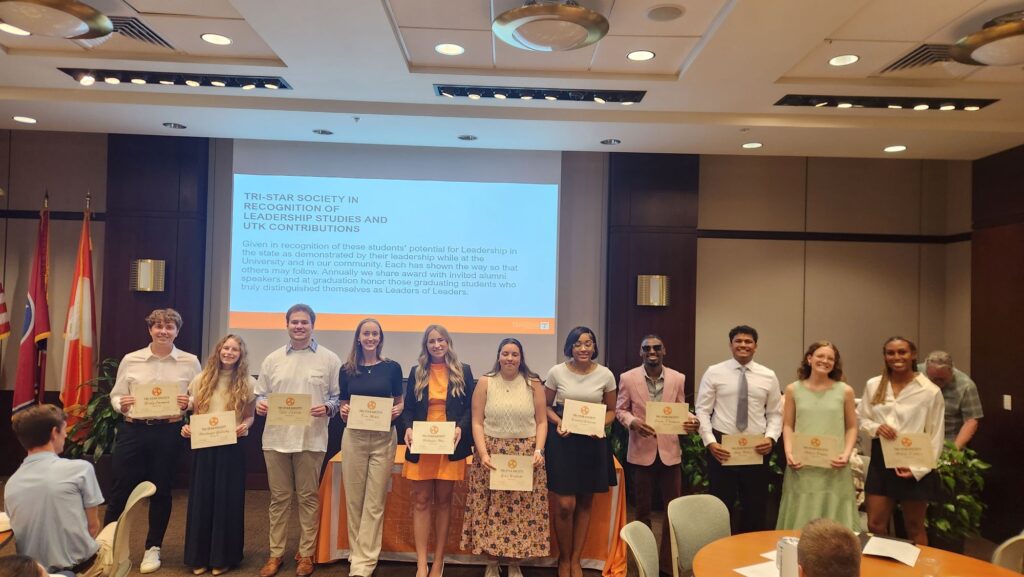

Hello! My name is M. Andrew Young. I’m a second-year Ph.D. student in the Evaluation, Statistics, and Methodology (ESM) program in the Educational Leadership and Policy Studies (ELPS) department at the University of Tennessee, Knoxville. Alongside my studies at the University of Tennessee, I am a higher education assessment director at East Tennessee State University in their College of Pharmacy.

Evaluation practices are becoming increasingly utilized across many industries. The American Evaluation Association (AEA) lists general industries on its careers page (Consulting, Education/Teaching/Administration, Government/Civil Service, Healthcare/Health Services, Non-profit/Charity, Other) (Evaluation Jobs – American Evaluation Association, n.d.). A quick Google search also indicates numerous other business-related evaluation opportunities (What Industries Employ Evaluators – Google Search, 2024).

Why do I bother stating the obvious? Evaluation is everywhere!

Simple. As an emerging evaluator (this is a whole different discussion, but in short, I’m newer to the field, so I’m “emerging”), it is important to critically reflect upon what it means to be an evaluator as a professional identity. Medical doctors have “M.D.” or “D.O.” degrees, and after an initial licensing process, they have an ongoing licensure examination as well as continuing education and conduct requirements (FSMB | About Physician Licensure, 2024). Professional engineers have similar requirements, such as initial licensure and continuing education (Maintaining a License, 2024). Pharmacists also have to pass licensure examinations (one national exam, the NAPLEX, and one state-specific exam, the MPJE) and meet continuing education requirements (Pharmacist Licensing Requirements & Service | Harbor Compliance | www.Harborcompliance.Com, 2024).

Why do they do this? Education helps, experience helps, but why do these few aforementioned professions require licensure and continuing education as part of their right to practice their profession?

Professional identity is an important function of entrustment given to a profession, and I pose the question: Can licensure with a continuing education requirement support that trust given to evaluators? It may be time to consider to what extent credentialing would support entrustment by those affected by or participating in evaluation activities.

In the “early days” of the AEA in the 1990s, the subject of credentialing was broached, and there was such sharp dissent about how to handle it that AEA put off addressing the question until it formed its AEA Competency Task Force. In 2015, “The Task Force believed that without AEA agreement about what competencies were essential, it was premature to decide how these competencies would be measured and monitored. Efforts such as the viability and value of adopting a credentialing or assessment system can be the task of working groups that follow ours” (Tucker et al., 2020).

AEA is in good company without a licensure or credentialing requirement, as it follows the example of other major evaluation societies that also do not require or offer credentialing (to my knowledge, only the CES and JES offer this at this point in time) (Altschuld, 1999; Ayoo et al., 2020; Tucker et al., 2020).

What does it mean to me?

In a rather pragmatic sense, a credentialing requirement would add a barrier to entry that would protect the economy of evaluation. A continuing education requirement would help make sure that practitioners in evaluation are keeping current, and a conduct policy would help ensure ethical practice of evaluation. All in all, these measures would hopefully maintain the quality of the profession. While I have not explored how pervasive “bad” evaluation practice is, the continued growth of the field means more people are doing evaluation, which could open the door for inexperienced and unknowledgeable evaluators to practice. “What about people who are doing some evaluation work for employers but aren’t ‘professional’ evaluators?” you may ask. Good question. I’ll answer: people who do evaluation work as a part of their private employment would not be required to be licensed or credentialed, but having a license or credential might give them leverage to advance their careers and get compensated commensurate with their abilities. One does not have to be licensed in a software language to use it in the context of employment, but a person with a credential in software languages (usually in the form of a certificate embedded in a degree program) can ask for more compensation because they have demonstrated competence, and their employer can therefore entrust them with the tasks they will be asked to complete.

Credentialing has been touted to do much more for the profession than what I listed above, and here is a cool resource to read on the topic:

- Ayoo, S., Wilcox, Y., LaVelle, J. M., Podems, D., & Barrington, G. V. (2020). Grounding the 2018 AEA Evaluator Competencies in the Broader Context of Professionalization. New Directions for Evaluation, 2020(168), 13–30. https://doi.org/10.1002/ev.20440

What’s the downside?

Well, like anything, credentialing can have negative implications. First, a credentialing body must be formed; second, credentialing requirements must be developed, adopted, and implemented. Then there is the question of what to do with the evaluators already practicing in the field. The licensure examination must also be maintained. The list goes on, and formalizing the credentialing of evaluators can become a very large and very expensive endeavor. Pharmacy faced this kind of change when the industry moved from a bachelor’s degree requirement to a PharmD program in 1997. Their solution was to allow BPharm and previously licensed pharmacists to continue to practice, and the accrediting body allowed colleges of pharmacy to offer two-year “upgrades” from a BPharm to a PharmD program for pre-existing licensed pharmacists (Supapaan et al., 2019).

Finally, and just as important, how do we design and implement a credentialing process that is both equitable and sustainable?

Conclusion:

Harkening back to the Shakespearean title, I leave you with this:

“To evaluate, or to be evaluated, that is the question:

Whether ‘tis nobler in the mind to suffer the slings and arrows of a burdensome credentialing process,

Or to take arms against the lack of professional identity,

And by adopting a credentialing process, end them.

To credential – to license.

No more; and by credentialing process to say we end the confusion of ‘who am I?’ and the thousand questions of entrustment that our profession is heir to: ‘tis a consummation devoutly to be wish’d.”

References:

Altschuld, J. W. (1999). The Certification of Evaluators: Highlights from a Report Submitted to the Board of Directors of the American Evaluation Association. American Journal of Evaluation, 20(3), 481–493. https://doi.org/10.1177/109821409902000307

Ayoo, S., Wilcox, Y., LaVelle, J. M., Podems, D., & Barrington, G. V. (2020). Grounding the 2018 AEA Evaluator Competencies in the Broader Context of Professionalization. New Directions for Evaluation, 2020(168), 13–30. https://doi.org/10.1002/ev.20440

Clarke, P. A. (2009). Leadership, beyond project management. Industrial and Commercial Training, 41(4), 187–194. https://doi.org/10.1108/00197850910962760

Evaluation Jobs—American Evaluation Association. (n.d.). Retrieved March 23, 2024, from https://careers.eval.org?site_id=22991

FSMB | About Physician Licensure. (2024). https://www.fsmb.org/u.s.-medical-regulatory-trends-and-actions/guide-to-medical-regulation-in-the-united-states/about-physician-licensure/

Gill, S., Kuwahara, R., & Wilce, M. (2016). Through a Culturally Competent Lens: Why the Program Evaluation Standards Matter. Health Promotion Practice, 17(1), 5–8. https://doi.org/10.1177/1524839915616364

Jarrett, J. B., Berenbrok, L. A., Goliak, K. L., Meyer, S. M., & Shaughnessy, A. F. (2018). Entrustable Professional Activities as a Novel Framework for Pharmacy Education. American Journal of Pharmaceutical Education, 82(5), 6256. https://doi.org/10.5688/ajpe6256

Kumas-Tan, Z., Beagan, B., Loppie, C., MacLeod, A., & Frank, B. (2007). Measures of Cultural Competence: Examining Hidden Assumptions. Academic Medicine, 82(6). https://journals.lww.com/academicmedicine/fulltext/2007/06000/measures_of_cultural_competence__examining_hidden.5.aspx

Liphadzi, M., Aigbavboa, C. O., & Thwala, W. D. (2017). A Theoretical Perspective on the Difference between Leadership and Management. Creative Construction Conference 2017, CCC 2017, 19-22 June 2017, Primosten, Croatia, 196, 478–482. https://doi.org/10.1016/j.proeng.2017.07.227

Maintaining a License. (2024). National Society of Professional Engineers. https://www.nspe.org/resources/licensure/maintaining-license

Pharmacist Licensing Requirements & Service | Harbor Compliance | www.harborcompliance.com. (2024). https://www.harborcompliance.com/pharmacist-license

SenGupta, S., Hopson, R., & Thompson-Robinson, M. (2004). Cultural competence in evaluation: An overview. New Directions for Evaluation, 2004(102), 5–19. https://doi.org/10.1002/ev.112

Supapaan, T., Low, B. Y., Wongpoowarak, P., Moolasarn, S., & Anderson, C. (2019). A transition from the BPharm to the PharmD degree in five selected countries. Pharmacy Practice, 17(3), 1611. https://doi.org/10.18549/PharmPract.2019.3.1611

Tucker, S. A., Barela, E., Miller, R. L., & Podems, D. R. (2020). The Story of the AEA Competencies Task Force (2015–2018). New Directions for Evaluation, 2020(168), 31–48. https://doi.org/10.1002/ev.20439

What Industries Employ Evaluators – Google Search. (2024).

What Is Leadership? | Definition by TechTarget. (n.d.). CIO. Retrieved March 17, 2024, from https://www.techtarget.com/searchcio/definition/leadership
