Reflecting on a Decade After ESM: My Continuing Journey as an Evaluation Practitioner and Scholar

By Tiffany Tovey, Ph.D.

Greetings, fellow explorers of evaluation! I’m Tiffany Tovey, a fellow nerd, UTK alum, and practitioner on a constantly evolving professional and personal journey, navigating the waters with a compass called reflective practice. Today, I’m thrilled to reflect with you on the twists and turns of my journey as an evaluation practitioner and scholar in the decade since I defended my dissertation, and to offer some insights for you to consider in your own work.

My Journey in Evaluation

Beginning the unlearning process. The seeds of my journey into social science research were sown during my undergraduate years as a first-generation college student at UTK, where I pursued both philosophy and psychology for my bachelor’s degree. While learning about the great philosophical debates and thinkers, I was traditionally trained in experimental and social psychology under the mentorship of Dr. Michael Olson. This rigorous grounding in knowledge and inquiry shaped my perspective on what was to come: I learned the importance of asking questions, embracing fallibilism, and appreciating the depth of what I now call reflective practice. Little did I know that this foundation would set the stage for my immersion into the world of evaluation, starting with the Evaluation, Statistics, and Methodology (ESM) program at UTK.

Upon entering ESM for my Ph.D., I found myself in the messy, complex, and dynamic realm of applying theory to practice. Here, my classical training in positivist, certainty-oriented assumptions was immediately challenged (in ways I am still unlearning to this day), and my interests in human behavior and reflective inquiry found a new, more nuanced, context-oriented environment to thrive. Let me tell you about lessons I learned from three key people along the way:

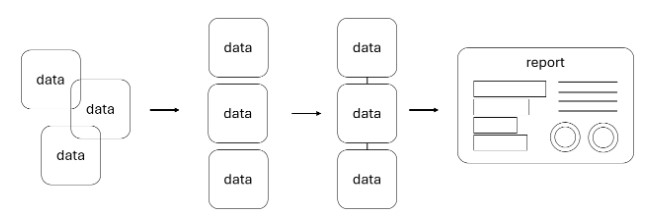

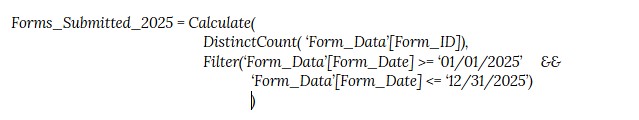

- Communicating Data/Information: Statistics are tools for effectively communicating about and reflecting on what we know about what is and (sometimes) why it is the way it is. Dr. Jennifer Ann Morrow played a pivotal role in shaping my understanding of statistics and their application in evaluation. Her emphasis on making complex statistical information accessible and meaningful to students, clients, and other audiences has stuck with me.

As important as statistics are, so too are words—people’s lived experiences, which is why qualitative research is SO important in our work, something that all my instructors helped to instill in me in ESM. I can’t help it; I’m a word nerd. Whether qualitative or quantitative, demystifying concepts, constructs, and contexts, outsmarting software and data analysis programs, and digesting and interpreting information in a way that our busy listeners can understand and make use of is a fundamental part of our jobs.

- Considering Politics and Evaluation Use: Under the mentorship of Dr. Gary Skolits, a retired ESM faculty member and current adjunct faculty member at UTK, I began to understand the intricate dance evaluators perform in the realms of politics and the use of evaluation findings. His real talk and guidance helped prepare me for the complexities of reflective practice in evaluation, which became the focus of my dissertation. Upon reflection, I see my dissertation work as a continuation of the reflective journey I began in my undergraduate studies, and my work with Gary as a fine-tuning and clarification of the critical role of self-awareness, collaboration, facilitation, and tact in the evaluation process.

- The Key Ingredient – Collaborative Reflective Practice: My journey was deepened by my engagement with Dr. John Peters, another now-retired faculty member from UTK’s College of Education, Health, and Human Sciences, who introduced me to the value of collaborative reflective practice through dialogue and systematic reflective processes. His teachings seeded my belief that evaluators should facilitate reflective experiences for clients and collaborators, fostering deeper understandings, cocreated learning, and more meaningful outcomes (see the quote by John himself below… and think about the ongoing role of the evaluator during the lifecycle of a project). He illuminated the critical importance of connecting theory to practice through reflective practice, a transformative activity that occupies the liminal space between past actions and future possibilities. This approach encourages us to critically examine the complexities of practice, thereby directly challenging the uncritical acceptance of the status quo.

My post-Ph.D. journey. I currently serve as the director of the Office of Assessment, Evaluation, and Research Services and teach program evaluation, qualitative methods, reflective practice, interpersonal skills, and just-in-time applied research skills to graduate and undergraduate students at UNC Greensboro. Here, I apply my theoretical knowledge to real-world evaluation projects, managing graduate students and leading them on their professional evaluation learning journey. Each project and collaboration has been an opportunity to apply and refine my understanding of reflective practice, effective communication, and the transformative power of evaluation.

My role at UNCG has been a continued testament to the importance of reflective practice. The need for intentional reflective experiences runs throughout my work as director of OAERS, as lead evaluator and researcher on sponsored projects, as a mentor who scaffolds student learning, and as a teacher. Building in structured time to think, unpack questions and decisions together, and learn how to go on more wisely is a ubiquitous need. Making space for reflective practice means leveraging the ongoing learning and unlearning process that defines the contours of (1) evaluation practice, (2) evaluation scholarship, and (3) let’s be honest… life itself!

Engaging with Others: The Heart of Evaluation Practice

As evaluators, our work is inherently collaborative and human-centered. We engage with diverse collaborators and audiences, each bringing their unique perspectives and experiences to the table. In this complex interplay of voices, it’s essential that we, as evaluators, foster authentic encounters that lead to meaningful insights and outcomes.

In the spirit of Martin Buber’s philosophy, I try to approach my interactions with an open heart and mind, seeking to establish a genuine connection with those I work with. Buber reminds us that “in genuine dialogue, each of the participants really has in mind the other or others in their present and particular being and turns to them with the intention of establishing a living mutual relation between himself and them” (Buber, 1965, p. 22). This perspective is foundational to my practice, as it emphasizes the importance of mutual respect and understanding in creating a space for collaborative inquiry and growth.

Furthermore, embracing a commitment to social justice is integral to my work as an evaluator. Paulo Freire’s insights resonate deeply with me:

Dialogue cannot exist, however, in the absence of a profound love for the world and for people. The naming of the world, which is an act of creation and re-creation, is not possible if it is not infused with love. Love is at the same time the foundation of dialogue and dialogue itself. (Freire, 2000, p. 90)

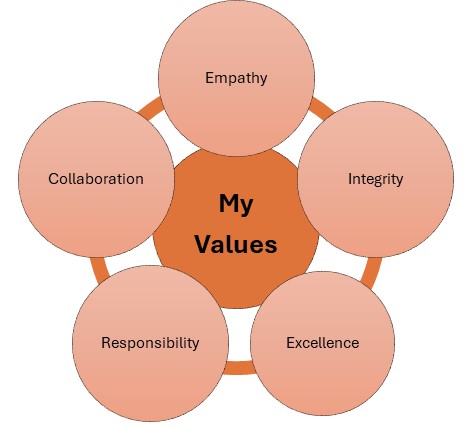

This principle guides me in approaching each evaluation project with a sense of empathy and a dedication to promoting equity and empowerment through my work.

Advice for Emerging Evaluators

- Dive in and embrace the learning opportunities that come your way.

- Reflect on your experiences and be honest with yourself.

- Remember, evaluation is about people and contexts, not just techniques and tools.

- Leverage your unique personality and lived experience in your work.

- Never underestimate the power of effective, authentic communication… and networking.

- Most importantly, listen to and attend to others—we are a human-serving profession geared towards social betterment. Be in dialogue with your surroundings and those you are in collaboration with. View evaluation as a reflective practice, and your role as a facilitator of that process. Consider how you can leverage the perspectives of Buber and Freire in your own practice to foster authentic encounters and center social justice in your work.

Conclusion and Invitation

My journey as an evaluation scholar is a journey of continuous learning, reflection, and growth. As I look to the future, I see evaluation as a critical tool for navigating the complex challenges of our world, grounded in reflective practice and a commitment to the public good. To my fellow evaluators, both seasoned and emerging, let’s embrace the challenges and opportunities ahead with open minds and reflective hearts. And to the ESM family at UTK, know that I am just an email away (tlsmi32@uncg.edu), always eager to connect, share insights, and reflect further with you.

Mary Dueñas has joined the College Student Personnel (CSP) program as the new program coordinator and is an assistant professor in the Department of Educational Leadership and Policy Studies. Dueñas holds her PhD from the University of Wisconsin-Madison in Educational Leadership and Policy Analysis. With publications in the Journal of College Student Development, Journal of Latinos and Education, International Journal of Qualitative Studies in Education, and Hispanic Journal of Behavioral Sciences, Dueñas’ research focuses on the Latinx college student experience. Her work attends to critical and social processes that affect this student population, with the intent that her findings inform how student affairs can and should work with these students to promote their success.

Mary Lynne Derrington, PhD, releases new book